AWS Managed Airflow vs AWS Step Functions

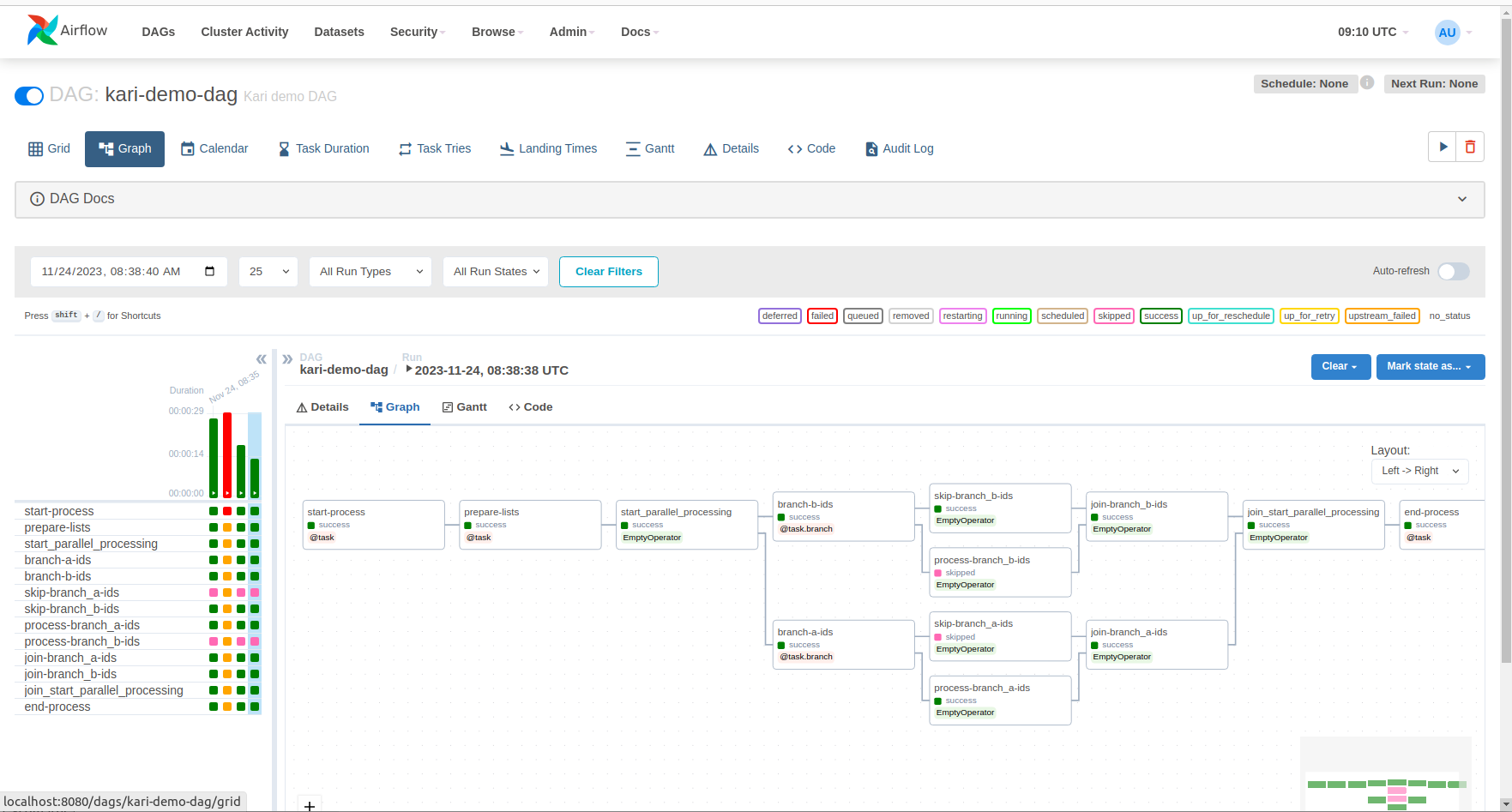

Airflow Standalone UI.

Introduction

In my previous blog post, AWS Step Functions - First Impressions, I wrote about AWS Step Functions - an AWS native orchestration service that lets you combine various AWS services as part of your process. In this new blog post I compare AWS Step Functions and Amazon Managed Workflows for Apache Airflow.

What is Amazon Managed Workflows for Apache Airflow?

Amazon Managed Workflows for Apache Airflow is an orchestration service like AWS Step Functions, that lets you define complex processes consisting of individual tasks (as steps in Step Functions). You can define standard Airflow tasks, or use various operators (like Python operator, Bash operator, AWS Lambda operator, AWS ECS Task operator, etc.). On AWS, you can define the same process using either Step Functions or AWS Managed Airflow.

In my current project, I have used both AWS Step Functions and AWS Managed Airflow to define complex processes. In the following sections I explain some differences between the tools, to help you decide which one to use on AWS.

Infrastructure Code

I implemented both the AWS Managed Airflow and the AWS Step Functions infrastructure using the AWS Serverless Application Model (SAM), which is basically AWS CloudFormation. You can read more about using SAM in my previous blog post Using AWS Serverless Application Model (SAM) First Impressions.

Both services were a bit complex to set up using SAM/CloudFormation, but not too difficult. There are good tutorials and examples for both services, and you should make use of them.

When I now compare the two SAM configurations, I see some 40 lines of code in the Step Functions template.yaml file that relate directly to setting up the Step Functions service, and some 160 lines of code in the AWS Managed Airflow template.yaml file that relate directly to setting up the AWS Managed Airflow service. Here is a short list of the resources you need to set up on each side:

AWS Step Functions:

- AWS::Serverless::StateMachine, and its DefinitionSubstitutions, Logging, and Policies.

AWS Managed Airflow:

- AWS::S3::Bucket for AWS Managed Airflow.
- AWS::IAM::Role for Airflow Execution.
- AWS::IAM::ManagedPolicy for Airflow Execution.
- AWS::EC2::SecurityGroup and AWS::EC2::SecurityGroupIngress for Airflow access.
- AWS::MWAA::Environment, and its PolicyDocument (allow access to the bucket, accessing CloudWatch logs, some SQS management, some KMS management, etc.): the actual AWS Managed Airflow resource.

So, there is a bit more to set up on the Airflow side, but that is a one-time task, and not too difficult using the tutorials and examples.

Process Definition

The main difference between AWS Managed Airflow and AWS Step Functions is that with AWS Step Functions you define your process as a JSON document. There is a nice GUI editor as well, and you should use it when designing the first rough version of your process.

The main advantage of AWS Managed Airflow is that you can use Python to define your process and tasks. The overall shape of the process is a lot easier to grasp at a glance on the Airflow side than by reading through a long AWS Step Functions JSON file. This is an example of a directed acyclic graph (DAG) that Airflow uses to define the process:

    start_process >> prepare_lists
    prepare_lists >> start_parallel_processing
    start_parallel_processing >> [branch_a_ids, branch_b_ids]
    branch_a_ids >> [skip_branch_a_ids, process_branch_a_ids]
    branch_b_ids >> [skip_branch_b_ids, process_branch_b_ids]
    skip_branch_a_ids >> join_branch_a_ids
    skip_branch_b_ids >> join_branch_b_ids
    process_branch_a_ids >> join_branch_a_ids
    process_branch_b_ids >> join_branch_b_ids
    join_branch_a_ids >> join_start_parallel_processing
    join_branch_b_ids >> join_start_parallel_processing
    join_start_parallel_processing >> end_process

If you look at this code snippet and the picture at the beginning of this blog post, you immediately see what is happening in the Python DAG code.
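To make the "directed acyclic graph" idea concrete without an Airflow installation, here is a minimal sketch that models the same dependencies with Python's standard graphlib module and computes one valid execution order. The upstream dictionary is an illustration only, not something Airflow itself uses, and the sketch ignores the branching decision (in a real run only one of the skip/process tasks per branch would execute).

```python
from graphlib import TopologicalSorter

# Each key maps a task to the set of tasks that must finish before it.
# The edges mirror the `>>` statements of the DAG snippet.
upstream = {
    "prepare_lists": {"start_process"},
    "start_parallel_processing": {"prepare_lists"},
    "branch_a_ids": {"start_parallel_processing"},
    "branch_b_ids": {"start_parallel_processing"},
    "skip_branch_a_ids": {"branch_a_ids"},
    "process_branch_a_ids": {"branch_a_ids"},
    "skip_branch_b_ids": {"branch_b_ids"},
    "process_branch_b_ids": {"branch_b_ids"},
    "join_branch_a_ids": {"skip_branch_a_ids", "process_branch_a_ids"},
    "join_branch_b_ids": {"skip_branch_b_ids", "process_branch_b_ids"},
    "join_start_parallel_processing": {"join_branch_a_ids", "join_branch_b_ids"},
    "end_process": {"join_start_parallel_processing"},
}

# static_order() raises graphlib.CycleError if the graph contains a cycle,
# which is exactly the property a DAG must not have.
order = list(TopologicalSorter(upstream).static_order())
print(order[0], "...", order[-1])
```

The two branches have no edges between them, which is why a scheduler is free to run them in parallel.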

Personally, I think it was a lot easier to define the process using Airflow than using Step Functions.

Defining Tasks / Steps

When using Step Functions you define the steps as part of the JSON process definition. An example of a step that calls an AWS Lambda:

    "my-lambda": {
      "Type": "Task",
      "Resource": "arn:aws:states:::lambda:invoke",
      "Parameters": {
        "Payload.$": "$",
        "FunctionName": "${MyLambda}"
      },
      "Next": "end-my-lambda-pass",
      "ResultPath": "$.fetch-lambda"
    }
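The effect of ResultPath in this step can be hard to picture from the JSON alone. The following is a rough Python sketch of the idea, assuming a simple single-key path such as $.fetch-lambda: the step's input is kept, and the task result is added under that key. The function apply_result_path and the sample data are hypothetical, and real Step Functions supports richer reference paths than this.

```python
import copy


def apply_result_path(state_input: dict, task_result: dict, key: str) -> dict:
    """Mimic a single-key ResultPath: keep the input, add the result under `key`."""
    state_output = copy.deepcopy(state_input)
    state_output[key] = task_result
    return state_output


# Hypothetical step input.
state_input = {"branch_a_ids": [111, 222]}

# Hypothetical task result. With the lambda:invoke integration the function's
# return value arrives under "Payload", next to metadata such as "StatusCode".
task_result = {"Payload": {"status": "ok"}, "StatusCode": 200}

state_output = apply_result_path(state_input, task_result, "fetch-lambda")
print(state_output)
```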

The same definition using AWS Managed Airflow:

    my_lambda = LambdaInvokeFunctionOperator(
        task_id='my-lambda',
        function_name=AIRFLOW_VAR_MY_LAMBDA_NAME,
        payload="\{\{ti.xcom_pull(task_ids='start-process', key='payload_my_lambda')}}",
        dag=dag,
    )

I think it is more natural to define the tasks on the Airflow side since you can use a real programming language. (NOTE: I had to add \{\{ instead of just two curly braces since the markdown file did not show the line without the backslashes, i.e. the backslashes are not actually needed in the Python code. Airflow uses Jinja templates in the operators.)

Processing and Passing Data Between Tasks / Steps

This is where Airflow really shines compared to Step Functions. When using Step Functions you are restricted to the JSON operators and the so-called intrinsic functions provided by the Step Functions environment. If you need to do some data manipulation between the steps and the Step Functions environment does not provide a method for it, you have to create a custom AWS Lambda to do the data manipulation. This is really awkward. For example, I just couldn’t find a way to merge two lists using the Step Functions data manipulation methods. Therefore I had to set up the whole AWS Lambda infrastructure just to pass two lists to the Lambda, let the Lambda do a one-line merge operation, and return the merged list.
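For a sense of how little logic such a helper Lambda contains, here is a minimal sketch of what a handler of that kind might look like. The event keys branch_a_ids and branch_b_ids and the returned merged_ids key are assumptions for illustration, not the actual function from my project.

```python
def lambda_handler(event, context):
    """Merge the two ID lists passed in by the state machine."""
    # Treat a missing or null list as empty so that the merge never fails.
    branch_a_ids = event.get("branch_a_ids") or []
    branch_b_ids = event.get("branch_b_ids") or []
    return {"merged_ids": branch_a_ids + branch_b_ids}


# Local check without AWS; the context argument is not used by this handler.
result = lambda_handler({"branch_a_ids": [111, 222], "branch_b_ids": [333]}, None)
print(result)
```

The function itself is trivial; the overhead is everything around it (the Lambda resource, its IAM role, and the extra step in the state machine).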

Using Airflow you can just use Python to do simple things like merging two lists:

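    from airflow.decorators import task

    @task(task_id='prepare-lists')
    def prepare_lists_task(dag_run=None, ti=None):
        print('Starting task: prepare-lists')
        # Read the input lists from the DAG run configuration (default to empty lists).
        branch_a_ids = dag_run.conf.get('branch_a_ids') or []
        branch_b_ids = dag_run.conf.get('branch_b_ids') or []
        merged_ids = branch_a_ids + branch_b_ids
        # Publish the merged list as an XCom so that downstream tasks can pull it.
        ti.xcom_push(key='merged_ids', value=merged_ids)
        return merged_ids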

Using Airflow, you pass data between the tasks using XComs. The code snippet above shows an example of how to publish some data from a task: ti.xcom_push(key='merged_ids', value=merged_ids). You can pull this data in other tasks like this: merged_list = ti.xcom_pull(task_ids='prepare-lists', key='merged_ids').
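The consuming side can be sketched in the same spirit. In the example below, count_merged_ids_task is a hypothetical downstream task body, and FakeTaskInstance is only a stand-in for Airflow's task instance so that the push/pull round trip can be tried locally without Airflow; in a real DAG, Airflow injects the actual ti object.

```python
class FakeTaskInstance:
    """Stand-in for Airflow's task instance, for local illustration only."""

    def __init__(self):
        self._store = {}

    def xcom_push(self, key, value):
        self._store[key] = value

    def xcom_pull(self, task_ids=None, key=None):
        # Real XComs are also scoped by task_ids; this stub ignores that argument.
        return self._store.get(key)


def count_merged_ids_task(ti=None):
    """Hypothetical downstream task body: pull the merged list and count it."""
    merged_ids = ti.xcom_pull(task_ids='prepare-lists', key='merged_ids') or []
    return len(merged_ids)


ti = FakeTaskInstance()
ti.xcom_push(key='merged_ids', value=[111, 222])
count = count_merged_ids_task(ti=ti)
print(count)
```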

User Interface

AWS provides good interfaces for both services. The AWS Managed Airflow GUI is pretty much the same as the Airflow standalone GUI you can see at the beginning of this blog post.

Developer Experience

Personally, I prefer the Airflow developer experience over Step Functions. The process definition is nicer to implement using Python, and you can use Python in the tasks as well.

One major advantage is also Airflow Standalone, which you can use to implement the overall version of your process locally, and then continue implementing the AWS related operators on the AWS Managed Airflow side.

You can easily send events to Airflow Standalone, for example:

airflow dags trigger -c "{\"flag\": \"kari-debug\", \"branch_a_ids\": [111, 222], \"branch_b_ids\":[]}" kari-demo-dag
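Escaping the JSON by hand gets error-prone as the payload grows. One possible approach, sketched here with only the Python standard library, is to build the configuration as a dictionary and let json.dumps and shlex.join produce a correctly quoted command line. The payload and DAG id are the ones from the command above; the variable names are just for illustration.

```python
import json
import shlex

conf = {"flag": "kari-debug", "branch_a_ids": [111, 222], "branch_b_ids": []}
dag_id = "kari-demo-dag"

# shlex.join quotes each argument for a POSIX shell,
# so the JSON needs no manual backslash escaping.
command = shlex.join(["airflow", "dags", "trigger", "-c", json.dumps(conf), dag_id])
print(command)
```

The printed line can be pasted into a shell, or the argument list can be passed directly to subprocess.run without any shell quoting at all.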

When you edit the DAG files in Airflow Standalone, the server picks up the changes right away. This is a major pro for the Airflow developer experience. Updating the definitions in either AWS Managed Airflow or AWS Step Functions requires a considerably longer development feedback loop.

Some Other Considerations

There are other considerations than just the developer experience, however.

Costs. AWS Step Functions is a serverless service and you don’t pay infrastructure costs - you just pay for running the processes on the service. For AWS Managed Airflow, you have to create the Airflow infrastructure, which creates development costs. Running that infrastructure also has considerable costs, even if you do not run any processes on it. And most probably you want exact copies of your infrastructure for various environments (development, developer testing, CI testing, performance testing, customer testing, and production), so you need to set up a rather expensive service infrastructure for each of them. I recommend that you consult the AWS Pricing Calculator for AWS Managed Airflow costs - the costs depend on your needs (a more performant environment costs more).

Security. This is a major disadvantage of AWS Managed Airflow compared to AWS Step Functions. With Step Functions, every process is its own entity with its own process executor, i.e., you can assign a separate IAM Role for running each separate process in AWS Step Functions. Not so with AWS Managed Airflow. With AWS Managed Airflow, you create one Airflow Executor IAM Role, and you run all your DAGs (Airflow processes) with this IAM Role. For some Airflow DAGs this is not a problem. For example, if you compose your process using just ECS operators, your ECS Task Definitions have dedicated IAM Roles (following least privilege, each with rights to access only those resources the ECS task needs to access). But for the Python operator and most of the other Airflow operators, you run all the operators with the same common Airflow Executor IAM Role. This is a bit of a nuisance and it also violates the principle of least privilege. For example, if you have separate teams and those teams create DAGs of their own, all the teams use the same Airflow Executor IAM Role. The consequence is that the teams can, for instance, see each other’s S3 buckets. You can create more AWS Managed Airflow Environments - but that solution generates more expenses since the service has considerable costs.

If the costs or the security issues prevent you from using AWS Managed Airflow, you can always use AWS Step Functions - both services are good for complex workflows.

Conclusions

If you need to create complex processes on AWS you have two excellent services to choose from: Amazon Managed Workflows for Apache Airflow, and AWS Step Functions.

The writer is working at a major international IT corporation building cloud infrastructures and implementing applications on top of those infrastructures.

Kari Marttila

Kari Marttila’s Home Page in LinkedIn: https://www.linkedin.com/in/karimarttila/