My First Generative AI Project

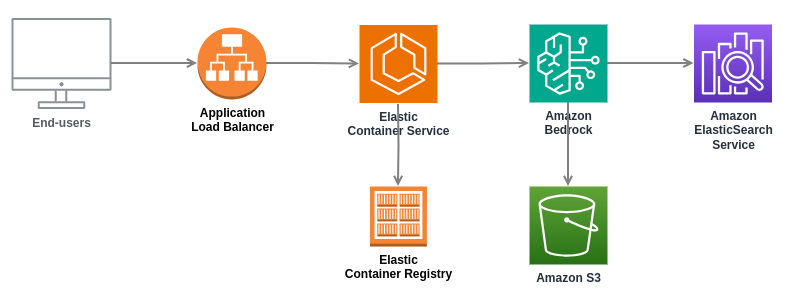

GenAI RAG Chatbot Architecture using AWS Services.

Introduction

In my previous blog post, "Generative AI with AWS Bedrock - First Impressions", I wrote about my first impressions of the AWS Bedrock service. In this blog post, I describe how I completed my first generative AI project using AWS services.

What is This Project About?

The project is a typical chatbot application. I implemented it using Python in the backend and TypeScript / React in the frontend. The chatbot is designed for a specific domain and answers questions about that domain in natural language. This type of chatbot is known as a Retrieval Augmented Generation (RAG) chatbot: it first retrieves relevant passages from a domain knowledge base and then uses a large language model to generate an answer based on them. The project required using the AWS platform, specifically the Amazon Bedrock service, which makes it easy to build and deploy generative AI applications. The infrastructure was implemented with Terraform as the infrastructure-as-code (IaC) tool.

Architecture and Infrastructure

I created the AWS infrastructure using Terraform. The infrastructure consists of the following main components (I omit some services, like IAM, VPC, CloudWatch, etc.):

- Application Load Balancer. The Application Load Balancer forwards incoming requests to a target group backed by the ECS service.

- Elastic Container Service (ECS). ECS provides the runtime for the application.

- Elastic Container Registry (ECR). ECR stores the Docker images for the application; ECS pulls the image from ECR.

- S3. S3 is used to store the input documents to be indexed for the knowledge base.

- AWS Bedrock. AWS Bedrock orchestrates the RAG functionality. Bedrock uses S3 as the data source for the knowledge base: it splits the input documents into chunks, converts the chunks into vector embeddings, and stores them in OpenSearch Service. When a question comes in, Bedrock queries OpenSearch Service to find the most relevant chunks in the knowledge base (the retrieval part of RAG). Bedrock then passes these chunks together with the question to a large language model, which generates the answer (the generation part).

- OpenSearch Service. OpenSearch Service serves as the vector store for the AWS Bedrock knowledge base. It stores the vectorized information from the input documents.
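Bedrock's `retrieve_and_generate` API performs the retrieval and generation steps in a single call. To make the flow described above more concrete, here is a minimal sketch that performs the two steps explicitly instead: the `retrieve` call of the `bedrock-agent-runtime` client fetches the most relevant chunks, and the Converse API of the `bedrock-runtime` client generates the answer. This is an illustrative alternative, not the project's code, and I have not checked how Bedrock builds its internal prompt; the function names, the prompt wording and `top_k=5` are my own assumptions. The boto3 clients are passed in as parameters, so the functions can also be tried with stubs.

```python
from __future__ import annotations

from typing import Any


def retrieve_passages(agent_client: Any, knowledgebase_id: str,
                      question: str, top_k: int = 5) -> list[str]:
    """Retrieval step: ask the knowledge base for the top_k most similar chunks."""
    response = agent_client.retrieve(
        knowledgeBaseId=knowledgebase_id,
        retrievalQuery={"text": question},
        retrievalConfiguration={
            "vectorSearchConfiguration": {"numberOfResults": top_k}
        },
    )
    return [result["content"]["text"] for result in response["retrievalResults"]]


def build_prompt(question: str, passages: list[str]) -> str:
    """Augmentation step: put the numbered passages in front of the question.

    Hypothetical prompt template; Bedrock's own internal prompt may differ.
    """
    context = "\n\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


def generate_answer(runtime_client: Any, model_id: str, prompt: str) -> str:
    """Generation step: send the augmented prompt to the model via the Converse API."""
    response = runtime_client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
    )
    return response["output"]["message"]["content"][0]["text"]


def rag_answer(agent_client: Any, runtime_client: Any, knowledgebase_id: str,
               model_id: str, question: str) -> str:
    """Retrieve, augment, generate: roughly what retrieve_and_generate does in one call."""
    passages = retrieve_passages(agent_client, knowledgebase_id, question)
    return generate_answer(runtime_client, model_id, build_prompt(question, passages))
```

With real clients, the call would be something like `rag_answer(boto3.client('bedrock-agent-runtime'), boto3.client('bedrock-runtime'), knowledgebase_id, model_id, question)`, where `model_id` is the ID of a Bedrock model that supports the Converse API. Doing the steps by hand gives control over the prompt and the number of chunks, at the cost of more code than the single managed call.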

I have used Terraform for almost ten years, so I am quite competent with the tool. I created a fully automated and parameterized infrastructure: using the same IaC code, I can easily create, for example, separate environments for customer demonstrations. The solution can also serve as a quick start for new generative AI projects in my company.

Application Development

The application was developed using Python in the backend and TypeScript / React in the frontend. This is an MVP application, so it is rather minimalistic: just a simple web page with a field for entering a question and a text box for the answer. The backend provides just one API endpoint for the queries. This MVP is enough to demonstrate the functionality of the chatbot, and it can later be extended with more features in real customer cases.

Developing the Python backend with FastAPI was a breeze. FastAPI is a modern, high-performance web framework for building APIs with Python. It provides a handy live reload feature, so development was really fast (though, once again, I really missed Clojure with its excellent Lisp REPL and data-oriented programming style).

The integration for querying the Bedrock knowledge base is really simple:

```python
import boto3

...

knowledgebase_id = settings.BEDROCK_KNOWLEDGEBASE_ID
aws_region = settings.AWS_REGION
foundation_model = settings.FOUNDATION_MODEL

# ARN of the foundation model that Bedrock uses to generate the answer
model_arn = f'arn:aws:bedrock:{aws_region}::foundation-model/{foundation_model}'

# The knowledge base query APIs live in the 'bedrock-agent-runtime' client
bedrock_client = boto3.client('bedrock-agent-runtime', region_name=aws_region)


def getAnswer(question):
    # Retrieve relevant chunks from the knowledge base and generate the answer in one call
    knowledgeBaseResponse = bedrock_client.retrieve_and_generate(
        input={'text': question},
        retrieveAndGenerateConfiguration={
            'knowledgeBaseConfiguration': {
                'knowledgeBaseId': knowledgebase_id,
                'modelArn': model_arn
            },
            'type': 'KNOWLEDGE_BASE'
        })
    return knowledgeBaseResponse
```
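`getAnswer` returns the raw boto3 response, which nests the generated text under `output.text` and the supporting chunks under `citations`. A frontend usually needs only the answer and perhaps the source documents. Below is a minimal sketch, assuming the response shape documented for `retrieve_and_generate`, of a wrapper that extracts these and optionally passes a `sessionId`, so that follow-up questions stay in the same conversation. The function names `ask` and `source_uris` are hypothetical, and the client is passed in so the code can be exercised with a stub.

```python
from __future__ import annotations

from typing import Any


def source_uris(response: dict[str, Any]) -> list[str]:
    """Collect unique S3 URIs of the cited chunks, in order of first appearance."""
    uris: list[str] = []
    for citation in response.get("citations", []):
        for ref in citation.get("retrievedReferences", []):
            uri = ref.get("location", {}).get("s3Location", {}).get("uri")
            if uri and uri not in uris:
                uris.append(uri)
    return uris


def ask(client: Any, knowledgebase_id: str, model_arn: str, question: str,
        session_id: str | None = None) -> dict[str, Any]:
    """Call retrieve_and_generate and reduce the response to what the UI needs."""
    kwargs: dict[str, Any] = {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledgebase_id,
                "modelArn": model_arn,
            },
        },
    }
    if session_id:
        # Reusing the sessionId from a previous answer continues the same conversation
        kwargs["sessionId"] = session_id
    response = client.retrieve_and_generate(**kwargs)
    return {
        "answer": response["output"]["text"],
        "sources": source_uris(response),
        "session_id": response.get("sessionId"),
    }
```

With the client from the snippet above, a first call could be `ask(bedrock_client, knowledgebase_id, model_arn, question)`; the frontend would then send the returned `session_id` back with the next question.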

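You can develop the backend locally with your own AWS credentials: the backend runs on your machine and connects to the Bedrock knowledge base in your AWS account. boto3 picks up the credentials from its standard credential chain, for example environment variables or the `~/.aws/credentials` file.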

Conclusion

Generative AI is here. The public cloud providers have made it really easy to build generative AI applications. And it seems that the costs are coming down significantly.

I anticipate that simple RAG chatbots will be offered as a public cloud service in the near future. This will make it really easy for companies to build chatbots for their specific domains. The real business opportunity will be in building AI agents that use domain knowledge bases to perform complex tasks for companies.

Anyway, we developers are living in exciting times. With generative AI, a single developer can build large applications that earlier required a whole team of developers (Using Copilot in Programming). And now, you as a developer can build generative AI applications that will transform society in ways we cannot even imagine yet.